
A Helm chart for Running VMCluster on Multiple Availability Zones

Prerequisites

- Install the following packages: `git`, `kubectl`, `helm`, `helm-docs`. See this tutorial.
- PV support on the underlying infrastructure.
- Multiple availability zones.

Chart Details

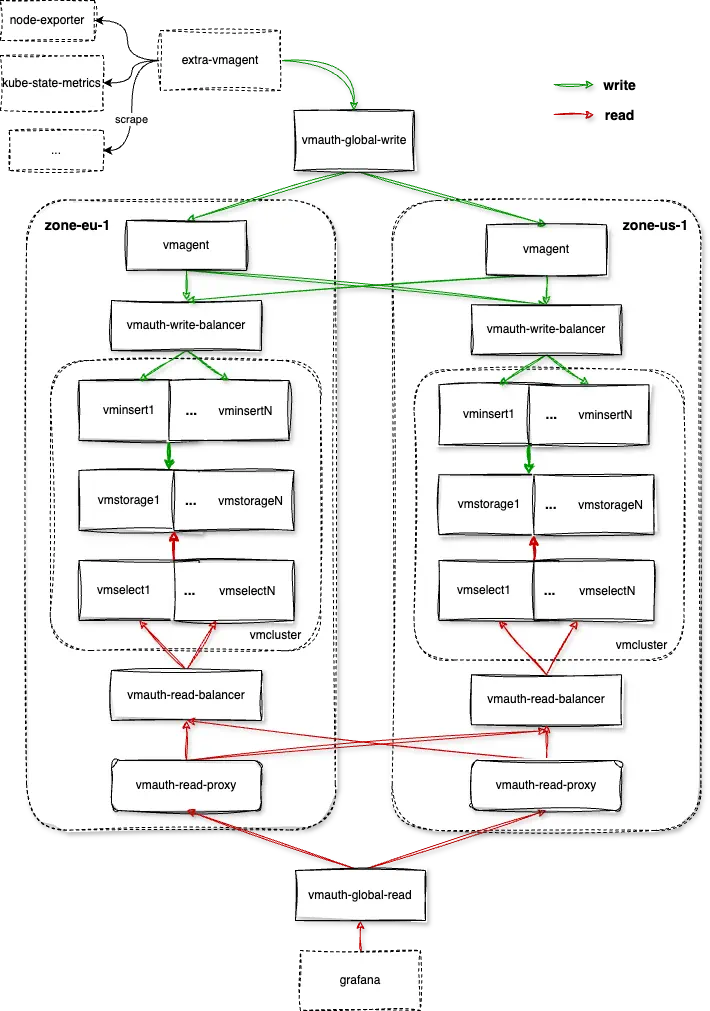

This chart sets up multiple VictoriaMetrics cluster instances on multiple availability zones and provides both global write and read entrypoints.

The default setup is as shown below:

For write:

- extra-vmagent (optional): scrapes external targets and all the components installed by this chart, and sends data to the global write entrypoint.
- vmauth-global-write: the global write entrypoint; proxies requests to one of the zone `vmagent`s with the `least_loaded` policy.
- vmagent (per-zone): remote writes data to the availability zones that have `.Values.availabilityZones[*].write.allow` enabled, and buffers data on disk when a zone is unavailable to ingest.
- vmauth-write-balancer (per-zone): proxies requests to the vminsert instances inside its zone with the `least_loaded` policy.
- vmcluster (per-zone): processes write requests and stores data.

For read:

- vmcluster (per-zone): processes query requests and returns results.
- vmauth-read-balancer (per-zone): proxies requests to the vmselect instances inside its zone with the `least_loaded` policy.
- vmauth-read-proxy (per-zone): uses as servers the `vmauth-read-balancer`s of all zones that have `.Values.availabilityZones[*].read.allow` enabled, and always prefers the "local" `vmauth-read-balancer` to reduce cross-zone traffic, using the `first_available` policy.
- vmauth-global-read: the global query entrypoint; proxies requests to one of the zone `vmauth-read-proxy`s with the `first_available` policy.
- grafana (optional): uses `vmauth-global-read` as the default datasource.
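Every zone gets its own vmcluster built from the same template. As a minimal sketch, settings that should apply to all zones can go under `common.vmcluster.spec`, which each zone's VMCluster configuration can still override. The replica counts and retention below are hypothetical values chosen for illustration, and the field names assume the VMCluster CRD layout of the VictoriaMetrics operator.

```yaml
# values.yaml fragment (hypothetical values): applied to the VMCluster of every zone
common:
  vmcluster:
    spec:
      retentionPeriod: "14"   # same retention everywhere, since each zone holds a full copy
      vminsert:
        replicaCount: 2       # instances behind the per-zone vmauth-write-balancer
      vmselect:
        replicaCount: 2       # instances behind the per-zone vmauth-read-balancer
      vmstorage:
        replicaCount: 2
```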

Note: As shown in the topology above, this chart doesn't include components like vmalert, alertmanager, etc. by default. You can install them using the victoria-metrics-k8s-stack dependency or as a separate release.

Why use victoria-metrics-distributed chart?

One of the best practices for running a production Kubernetes cluster is to run it across multiple availability zones. Apart from the Kubernetes control plane components, we also want to spread our application pods across multiple zones so that they keep serving even if a zone outage happens.

VictoriaMetrics supports data replication natively, which can guarantee data availability when some of the vmstorage instances fail. However, it doesn't work well if vmstorage instances are spread across multiple availability zones, since all replicas of a piece of data could end up in a single availability zone and be lost when that zone has an outage. To avoid this, vmcluster must be installed in multiple availability zones, each containing a 100% copy of the data. As long as one zone is available, both the global write and read entrypoints should work without interruption.
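For each zone to hold its own complete copy, that zone's components have to actually run in that zone. A minimal sketch, assuming your nodes carry the standard `topology.kubernetes.io/zone` label and that its values match the example zone names used in this README (real clusters use the provider's zone names), pins them with `availabilityZones[*].common.spec.nodeSelector`:

```yaml
# values.yaml fragment: one entry per availability zone
availabilityZones:
  - name: zone-eu-1
    common:
      spec:
        nodeSelector:
          topology.kubernetes.io/zone: zone-eu-1   # schedule this zone's vmcluster, vmagent and vmauth here
  - name: zone-us-1
    common:
      spec:
        nodeSelector:
          topology.kubernetes.io/zone: zone-us-1
```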

How to write data?

The chart provides `vmauth-global-write` as the global write entrypoint. It supports the same push-based data ingestion protocols as VictoriaMetrics.

Optionally, you can push data to any of the per-zone vmagents, and they will replicate the received data across zones.
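Any client that speaks one of these protocols can target the global entrypoint. For example, a Prometheus `remote_write` fragment might look like the sketch below. The service name follows the pattern used in the vmagent command of the multitenancy section, `$ReleaseName` stands for your Helm release name, and a client running in another namespace would need the fully qualified service name.

```yaml
# prometheus.yml fragment (sketch): replace $ReleaseName with your Helm release name
remote_write:
  - url: "http://vmauth-vmauth-global-write-$ReleaseName-vm-distributed:8427/prometheus/api/v1/write"
```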

How to query data?

The chart provides `vmauth-global-read` as the global read entrypoint. It picks the first available zone (see the `first_available` policy) as its preferred datasource and automatically switches to the next zone if the first one is unavailable; check vmauth `first_available` for more details.
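The chart generates this configuration through the VictoriaMetrics operator, so you normally don't write it by hand. Purely as an illustration of the `first_available` behavior, a standalone vmauth `-auth.config` with a similar effect could look like this; the backend service names are hypothetical placeholders.

```yaml
# Standalone vmauth -auth.config sketch (hypothetical service names)
unauthorized_user:
  url_prefix:
    # backends are tried in list order
    - "http://vmauth-read-proxy-zone-eu-1:8427/"
    - "http://vmauth-read-proxy-zone-us-1:8427/"
  # send all requests to the first healthy backend; move on only if it is unavailable
  load_balancing_policy: first_available
```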

If you have services like vmalert or Grafana deployed in each zone, configure them to use the local `vmauth-read-proxy`. The per-zone `vmauth-read-proxy` always prefers the "local" vmcluster for querying, which reduces cross-zone traffic.

You can also use other proxies as the global read entrypoint, such as a Kubernetes Service with Topology Aware Routing enabled.
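A minimal sketch of such a Service, assuming Kubernetes 1.27 or later (older releases use the `service.kubernetes.io/topology-aware-hints: auto` annotation instead). The Service name and the `read-proxy` label are hypothetical: the chart does not necessarily set such a label, so you would need a selector that matches only the per-zone `vmauth-read-proxy` pods in your deployment. Port `8427` is the vmauth default.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: vmauth-read-topology   # hypothetical name
  annotations:
    # let the EndpointSlice controller add zone hints so traffic stays in the client's zone when possible
    service.kubernetes.io/topology-mode: Auto
spec:
  selector:
    read-proxy: "true"         # hypothetical label shared by all vmauth-read-proxy pods
  ports:
    - name: http
      port: 8427
      targetPort: 8427
```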

What happens if a zone outage happens?

If availability zone `zone-eu-1` is experiencing an outage, `vmauth-global-write` and `vmauth-global-read` will keep working without interruption:

- `vmauth-global-write` stops proxying write requests to `zone-eu-1` automatically;
- `vmauth-global-read` and `vmauth-read-proxy` stop proxying read requests to `zone-eu-1` automatically;
- `vmagent` on `zone-us-1` fails to send data to the `vmauth-write-balancer` of `zone-eu-1` and starts to buffer data on disk (unless `-remoteWrite.disableOnDiskQueue` is specified, which is not recommended for this topology). To keep data complete in all the availability zones, make sure vmagent has enough disk space for the buffer; see this doc for size recommendations.
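One related knob is the vmagent flag `-remoteWrite.maxDiskUsagePerURL`, which caps the on-disk buffer for each remote write URL. Once the cap is reached, vmagent drops the oldest buffered data, so the cap should be large enough to cover the longest zone outage you want to survive. A sketch, assuming the flag is passed through the VMAgent `extraArgs` field of the per-zone vmagent spec; the `50GB` value is hypothetical.

```yaml
# values.yaml fragment (hypothetical size)
zoneTpl:
  vmagent:
    spec:
      extraArgs:
        # limit applies per remote write URL, i.e. separately for each zone this vmagent writes to
        remoteWrite.maxDiskUsagePerURL: "50GB"
```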

To avoid getting incomplete responses from `zone-eu-1` after it recovers from the outage, check the vmagent on `zone-us-1` to see whether its persistent queue has been drained. If not, remove `zone-eu-1` from serving queries by setting `.Values.availabilityZones.{zone-eu-1}.read.allow=false`, and change it back after confirming that all data has been restored.
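In `values.yaml` this could look like the sketch below. Helm replaces lists as a whole rather than merging them, so keep every zone entry (and any per-zone settings you already have) in the list; the zone names are the ones used in this example.

```yaml
availabilityZones:
  - name: zone-eu-1
    read:
      allow: false   # stop serving queries from the recovering zone
  - name: zone-us-1
```

Apply it with `helm upgrade`, then set `allow` back to `true` and upgrade again once the queue is drained.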

How to use multitenancy?

By default, all the data written to `vmauth-global-write` belongs to tenant `0`. To write data to different tenants, set `.Values.enableMultitenancy=true` and create new tenant users for `vmauth-global-write`.

For example, to write data to tenant `1088`, follow these steps:

- Create a tenant VMUser for `vmauth-global-write` to use:

  ```yaml
  apiVersion: operator.victoriametrics.com/v1beta1
  kind: VMUser
  metadata:
    name: tenant-1088-rw
    labels:
      tenant-test: "true"
  spec:
    targetRefs:
      - static:
          ## list all the zone vmagents here
          urls:
            - "http://vmagent-vmagent-zone-eu-1:8429"
            - "http://vmagent-vmagent-zone-us-1:8429"
        paths:
          - "/api/v1/write"
          - "/prometheus/api/v1/write"
          - "/write"
          - "/api/v1/import"
          - "/api/v1/import/.+"
        target_path_suffix: /insert/1088/
    username: tenant-1088
    password: secret
  ```

- Add an extra VMUser selector to the `vmauth-global-write` VMAuth:

  ```yaml
  spec:
    userSelector:
      matchLabels:
        tenant-test: "true"
  ```

- Send data to `vmauth-global-write` using the credentials above. Example command using vmagent:

  ```shell
  /path/to/vmagent -remoteWrite.url=http://vmauth-vmauth-global-write-$ReleaseName-vm-distributed:8427/prometheus/api/v1/write -remoteWrite.basicAuth.username=tenant-1088 -remoteWrite.basicAuth.password=secret
  ```
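Expressed as chart values, the multitenancy switch and the VMUser selector might be set together as below. This is a sketch that assumes `write.global.vmauth.spec` is passed to the VMAuth CRD as described in the parameters table; the `tenant-test` label is the one used in this example.

```yaml
enableMultitenancy: true
write:
  global:
    vmauth:
      spec:
        # pick up VMUser objects labeled like tenant-1088-rw
        userSelector:
          matchLabels:
            tenant-test: "true"
```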

How to install

Access a Kubernetes cluster.

Set up the chart repository (can be omitted for OCI repositories)

Add the chart Helm repository with the following commands:

```shell
helm repo add vm https://victoriametrics.github.io/helm-charts/

helm repo update
```

List the versions of the `vm/victoria-metrics-distributed` chart available for installation:

```shell
helm search repo vm/victoria-metrics-distributed -l
```

Install victoria-metrics-distributed chart

Export the default values of the `victoria-metrics-distributed` chart to the file `values.yaml`:

- For HTTPS repository

  ```shell
  helm show values vm/victoria-metrics-distributed > values.yaml
  ```

- For OCI repository

  ```shell
  helm show values oci://ghcr.io/victoriametrics/helm-charts/victoria-metrics-distributed > values.yaml
  ```

Change the values in `values.yaml` according to the needs of your environment.

Test the installation with this command:

- For HTTPS repository

  ```shell
  helm install vmd vm/victoria-metrics-distributed -f values.yaml -n NAMESPACE --debug --dry-run
  ```

- For OCI repository

  ```shell
  helm install vmd oci://ghcr.io/victoriametrics/helm-charts/victoria-metrics-distributed -f values.yaml -n NAMESPACE --debug --dry-run
  ```

Install the chart with this command:

- For HTTPS repository

  ```shell
  helm install vmd vm/victoria-metrics-distributed -f values.yaml -n NAMESPACE
  ```

- For OCI repository

  ```shell
  helm install vmd oci://ghcr.io/victoriametrics/helm-charts/victoria-metrics-distributed -f values.yaml -n NAMESPACE
  ```

Get the list of pods by running this command:

```shell
kubectl get pods -A | grep 'vmd'
```

Get the application by running this command:

```shell
helm list -f vmd -n NAMESPACE
```

See the history of versions of the `vmd` application with this command:

```shell
helm history vmd -n NAMESPACE
```

How to upgrade

To keep serving queries and ingestion while upgrading component versions or changing configurations, it's recommended to perform maintenance on one availability zone at a time.

First, perform the update on availability zone `zone-eu-1`:

1. remove `zone-eu-1` from serving queries by setting `.Values.availabilityZones.{zone-eu-1}.read.allow=false`;
2. run `helm upgrade vmd vm/victoria-metrics-distributed -f values.yaml -n NAMESPACE` with the updated configuration for `zone-eu-1` in `values.yaml`;
3. wait for all the components in zone `zone-eu-1` to be running;
4. wait until the `zone-us-1` vmagent's persistent queue for `zone-eu-1` has been drained, then add `zone-eu-1` back to serving queries by setting `.Values.availabilityZones.{zone-eu-1}.read.allow=true`.

Then, perform the update on availability zone `zone-us-1` with the same steps 1 to 4.
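For the first two steps on `zone-eu-1`, the edited `values.yaml` might combine the read switch with the actual change being rolled out. In this sketch the `retentionPeriod` value is a hypothetical stand-in for whatever configuration you are updating; for the last step you would set `read.allow` back to `true` and run `helm upgrade` again.

```yaml
availabilityZones:
  - name: zone-eu-1
    read:
      allow: false             # take zone-eu-1 out of query serving during its upgrade
    vmcluster:
      spec:
        retentionPeriod: "30"  # hypothetical change rolled out to zone-eu-1 only
  - name: zone-us-1            # left untouched until zone-eu-1 has caught up
```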

Upgrade to 0.5.0

This release was refactored, and the names of several parameters were changed:

- `vmauthIngestGlobal` was changed to `write.global.vmauth`
- `vmauthQueryGlobal` was changed to `read.global.vmauth`
- `availabilityZones[*].allowIngest` was changed to `availabilityZones[*].write.allow`
- `availabilityZones[*].allowRead` was changed to `availabilityZones[*].read.allow`
- `availabilityZones[*].nodeSelector` was moved to `availabilityZones[*].common.spec.nodeSelector`
- `availabilityZones[*].extraAffinity` was moved to `availabilityZones[*].common.spec.affinity`
- `availabilityZones[*].topologySpreadConstraints` was moved to `availabilityZones[*].common.spec.topologySpreadConstraints`
- `availabilityZones[*].vmauthIngest` was moved to `availabilityZones[*].write.vmauth`
- `availabilityZones[*].vmauthQueryPerZone` was moved to `availabilityZones[*].read.perZone.vmauth`
- `availabilityZones[*].vmauthCrossAZQuery` was moved to `availabilityZones[*].read.crossZone.vmauth`

Example:

If, before the upgrade, you had the following configuration:

```yaml
vmauthIngestGlobal:
  spec:
    extraArgs:
      discoverBackendIPs: "true"
vmauthQueryGlobal:
  spec:
    extraArgs:
      discoverBackendIPs: "true"
availabilityZones:
  - name: zone-eu-1
    vmauthIngest:
      spec:
        extraArgs:
          discoverBackendIPs: "true"
    vmcluster:
      spec:
        retentionPeriod: "14"
```

after the upgrade it should look like this:

```yaml
write:
  global:
    vmauth:
      spec:
        extraArgs:
          discoverBackendIPs: "true"
read:
  global:
    vmauth:
      spec:
        extraArgs:
          discoverBackendIPs: "true"
availabilityZones:
  - name: zone-eu-1
    write:
      vmauth:
        spec:
          extraArgs:
            discoverBackendIPs: "true"
    vmcluster:
      spec:
        retentionPeriod: "14"
```
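The renamed zone switches and scheduling fields follow the same pattern. A sketch for a hypothetical zone that previously used `allowIngest`, `allowRead` and `nodeSelector`, with the old form kept as comments for comparison:

```yaml
# Before 0.5.0 (hypothetical values):
# availabilityZones:
#   - name: zone-eu-1
#     allowIngest: true
#     allowRead: false
#     nodeSelector:
#       topology.kubernetes.io/zone: zone-eu-1

# After 0.5.0:
availabilityZones:
  - name: zone-eu-1
    write:
      allow: true         # was allowIngest
    read:
      allow: false        # was allowRead
    common:
      spec:
        nodeSelector:     # was availabilityZones[*].nodeSelector
          topology.kubernetes.io/zone: zone-eu-1
```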

How to uninstall

Remove the application with this command:

```shell
helm uninstall vmd -n NAMESPACE
```

Documentation of Helm Chart

Install helm-docs following the instructions in this tutorial.

Generate docs with the `helm-docs` command:

```shell
cd charts/victoria-metrics-distributed

helm-docs
```

The Markdown generation is entirely Go-template driven. The tool parses metadata from charts and generates a number of sub-templates that can be referenced in a template file (by default `README.md.gotmpl`). If no template file is provided, the tool has a default internal template that will generate a reasonably formatted README.

Parameters

The following table lists the configurable parameters of the chart and their default values.

Change the values according to the needs of the environment in the `victoria-metrics-distributed/values.yaml` file.

| Key | Type | Default | Description |
|---|---|---|---|
| availabilityZones | list | | Config for all availability zones. Each element represents custom zone config, which overrides a default one from |
| availabilityZones[0].name | string | | Availability zone name |
| availabilityZones[1].name | string | | Availability zone name |
| common.vmagent.spec | object | | Common VMAgent spec, which can be overridden by each VMAgent configuration. Available parameters can be found here |
| common.vmauth.spec.port | string | | |
| common.vmcluster.spec | object | | Common VMCluster spec, which can be overridden by each VMCluster configuration. Available parameters can be found here |
| enableMultitenancy | bool | | Enable multitenancy mode, see here |
| extra | object | | Set up an extra vmagent to scrape all the scrape objects by default and write data to the global write endpoint. |
| fullnameOverride | string | | Overrides the chart's computed fullname. |
| global | object | | Global chart properties |
| global.cluster.dnsDomain | string | | K8s cluster domain suffix, used for building storage pods' FQDN. Details are here |
| nameOverride | string | | Overrides the chart's name |
| read.global.vmauth.enabled | bool | | Create vmauth as the global read entrypoint |
| read.global.vmauth.name | string | | Override the name of the vmauth object |
| read.global.vmauth.spec | object | | Spec for VMAuth CRD, see here |
| victoria-metrics-k8s-stack | object | | Set up vm operator and other resources like vmalert, grafana if needed |
| write.global.vmauth.enabled | bool | | Create a vmauth as the global write entrypoint |
| write.global.vmauth.name | string | | Override the name of the vmauth object |
| write.global.vmauth.spec | object | | Spec for VMAuth CRD, see here |
| zoneTpl | object | | Default config for each availability zone's components, including vmagent, vmcluster, vmauth etc. Defines a template for each availability zone, which can be overridden for each availability zone at |
| zoneTpl.common.spec | object | | Common VMAgent, VMAuth and VMCluster spec params, like nodeSelector, affinity, topologySpreadConstraint, etc. |
| zoneTpl.read.allow | bool | | Allow data query from this zone through the global query endpoint |
| zoneTpl.read.crossZone.vmauth.enabled | bool | | Create a vmauth with all the zone with |
| zoneTpl.read.crossZone.vmauth.name | string | | Override the name of the vmauth object |
| zoneTpl.read.crossZone.vmauth.spec | object | | Spec for VMAuth CRD, see here |
| zoneTpl.read.perZone.vmauth.enabled | bool | | Create vmauth as a local read endpoint |
| zoneTpl.read.perZone.vmauth.name | string | | Override the name of the vmauth object |
| zoneTpl.read.perZone.vmauth.spec | object | | Spec for VMAuth CRD, see here |
| zoneTpl.vmagent.annotations | object | | VMAgent remote write proxy annotations |
| zoneTpl.vmagent.enabled | bool | | Create VMAgent remote write proxy |
| zoneTpl.vmagent.name | string | | Override the name of the vmagent object |
| zoneTpl.vmagent.spec | object | | Spec for VMAgent CRD, see here |
| zoneTpl.vmcluster.enabled | bool | | Create VMCluster |
| zoneTpl.vmcluster.name | string | | Override the name of the vmcluster, by default is |
| zoneTpl.vmcluster.spec | object | | Spec for VMCluster CRD, see here |
| zoneTpl.write.allow | bool | | Allow data ingestion to this zone |
| zoneTpl.write.vmauth.enabled | bool | | Create vmauth as a local write endpoint |
| zoneTpl.write.vmauth.name | string | | Override the name of the vmauth object |
| zoneTpl.write.vmauth.spec | object | | Spec for VMAuth CRD, see here |